HBase is designed from the ground up to provide scalability and partitioning to enable efficient data structure serialization, storage and retrieval.

Apache HBase is a column-oriented key/value data store built to run on top of the Hadoop Distributed File System (HDFS) A non-relational (NoSQL) database that runs on top of HDFS Provides real-time read/write access to those large datasets Provides random, real time access to your data in Hadoop Great choice to store multi-structured or sparse data Low latency storage Versioned database Moving to HBase Installation. First of all installing HBase in standalone mode.

HBase is an open-source, distributed, multi-dimensional, and NoSQL database. It is designed to achieve high throughput and low latency. It provides fast and random read/write functionality on substantial datasets. It provides the bloom filter data structure, which fulfills the requirement of fast and random read-write. The HBase runs on the top of HDFS and provides all capabilities of a large table to Hadoop. HBase stores files on HDFS. It has the capabilities to store and process a billion rows of data at a time. Due to the dynamic feature of HBase, its storage capacity can be increased at any time .

HBase leverages Hadoop infrastructure like HDFS and Zookeeper. Zookeeper co-ordinates with HMaster to handle the region server. A region server has a collection of regions. The region server is responsible for the handling, managing, and reading/writing functions for the region. A region has data of all columns/qualifiers of column families. A HBase table can be divided into many regions where each region server handles a collection of regions. HBase became famous due to its features of fast and random read/write operation. Many companies adopted HBase because the current problem is handling big data with fast processing, and HBase is a good option.

The HBase framework offers several methods for extracting, manipulating, and storing big data. This tool has progressed in recent years due to its dynamic architecture and promotes data integrity, scalability, fault-tolerance, and easy-to-use methods. All of these variables have contributed to the popularity of Apache Hadoop, both in academia and in businesses. Most of the companies are using Apache HBase to store their dataset. They have changed the storage architecture according to the parameters of datasets.

HBase is a column-oriented database that’s an open-source implementation of Google’s Big Table storage architecture. It can manage structured and semi-structured data and has some built-in features such as scalability, versioning, compression and garbage collection.

Since its uses write-ahead logging and distributed configuration, it can provide fault-tolerance and quick recovery from individual server failures. HBase built on top of Hadoop / HDFS and the data stored in HBase can be manipulated using Hadoop’s MapReduce capabilities.

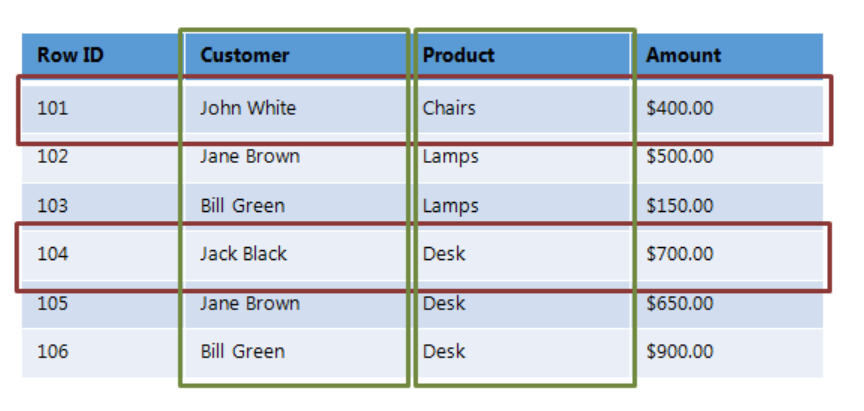

Let’s now take a look at how HBase (a column-oriented database) is different from some other data structures and concepts that we are familiar with Row-Oriented vs. Column-Oriented data stores. As shown below, in a row-oriented data store, a row is a unit of data that is read or written together. In a column-oriented data store, the data in a column is stored together and hence quickly retrieved.

Row-oriented data stores –

- Data is stored and retrieved one row at a time and hence could read unnecessary data if only some of the data in a row is required.

- Easy to read and write records

- Well suited for OLTP systems

- Not efficient in performing operations applicable to the entire dataset and hence aggregation is an expensive operation

- Typical compression mechanisms provide less effective results than those on column-oriented data stores

Column-oriented data stores –

- Data is stored and retrieved in columns and hence can read only relevant data if only some data is required

- Read and Write are typically slower operations

- Well suited for OLAP systems

- Can efficiently perform operations applicable to the entire dataset and hence enables aggregation over many rows and columns

- Permits high compression rates due to few distinct values in columns

- Log in to post comments